ChatGPT, an AI chatbot capable of writing in a human voice, is at the center of a controversy over the ethics of AI, but another system deserves scrutiny as well. Dall-E2, which uses similar technology to generate artwork from written descriptions, has much in common with ChatGPT, including its developer, OpenAI.

Similar programs such as Midjourney, Stable Diffusion and DreamUp learn from the dataset Laion-5B, which provides access to more than 5 billion captioned images, all taken from the internet. These programs learn to mimic the styles of human artists by studying their artwork, which such datasets make readily available.

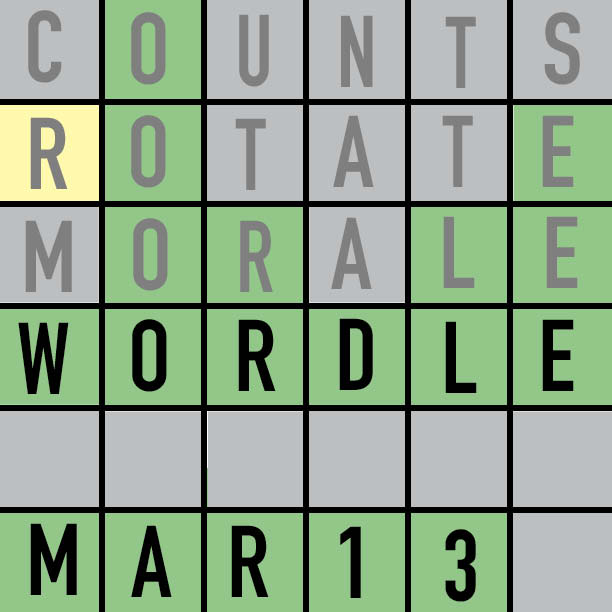

Matthew Butterick, an attorney representing three artists in a lawsuit against AI image generators, told The New Yorker that the issue comes down to "the three C's": consent, compensation and credit. Dall-E2 is a problem because it offers none of the three to the artists it relies on.

Alarmingly, anything and everything can end up in these datasets, even without express permission. Using Have I Been Trained, a recently launched site for searching Laion-5B, an artist found her own medical before-and-after photos in the collection. While it is impossible to know how those medical photos made it into Laion-5B, the case shows that people have little control over what is included. In milder cases, artists' work is exploited without their knowledge or approval.

What's more, AI researchers have found that art created by this technology tends to reinforce gender and racial stereotypes and to depict predominantly white people. This is partly due to a lack of diversity in the datasets, which draw heavily from Western art because it dominates the fine art world. According to an article by Vox, prompts naming male-dominated jobs produced results that almost exclusively portrayed men in those roles, and prompts naming female-dominated jobs almost exclusively portrayed women. For example, Dall-E2 largely produced white men when asked for pictures of lawyers and Asian women when asked for flight attendants. AI artwork could very well set representation in art back.

Dall-E2 uses what is known as a freemium model: it is free as long as users stay under the allotted 50 prompts in the first month and 15 in each month after that. Once an account goes over that threshold, 115 prompts cost $15 and can be used for up to 12 months. It is important to note that Dall-E2 is the only party profiting, even though its business depends on artists. Artists are unknowingly training a competitor that is far cheaper and faster than they are, threatens their sales and offers them no compensation.

Finally, Dall-E2 users are often unaware of which artists the program is imitating, and many may assume the system works unassisted or draws only on household names such as Picasso and Van Gogh. The inner workings of AI image programs are not common knowledge, and these misconceptions further erase artists' contributions.

Dall-E2 and similar technologies should adopt procedures that protect the privacy of artists and the general public, and they should draw from more diverse datasets. Last but not least, some form of attribution would be incredibly helpful to artists, letting AI image users see which people the AI has emulated. Doing so would promote safety online and support artists who are in danger of losing their livelihoods to AI.
